Privacy-by-Design Principles in Modern Computer Vision Systems

Privacy by design has become a fundamental expectation in computer vision, and it is changing how organizations design, deploy, and scale systems that affect people's everyday lives. Visual data now powers smart cities, health diagnostics, and retail analytics. At the same time, public concern about privacy is rising, and regulations such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) require strict privacy controls across the technology stack. Whether you are engaging a Computer Vision Development Company or using AI Development solutions, building privacy by design into your product from the start is essential for compliance, user trust, and ultimately the success of your business.

7 Principles of Privacy-by-Design

Privacy-by-design gives engineers and business leaders a guide for acting proactively rather than reactively: anticipating risk, minimizing exposure, and giving users real control at every step.

1. Proactive, Not Reactive

Systems anticipate privacy issues and prevent them rather than waiting for a privacy invasion to occur. Methods such as privacy threat modeling, regular assessments, and early stakeholder involvement let teams make privacy-relevant design decisions deliberately and early, rather than remediating after harm has occurred.

2. Privacy as the Default

Visual data is protected by default: it is collected only when necessary and only for the specified purpose. Users do not have to take any action to protect their privacy; protection is switched on from the start.

3. Privacy Embedded into the Design

Privacy is built into the architecture rather than bolted on afterward. The technologies and processes chosen protect data at every point in the development and deployment life cycle instead of treating privacy as an add-on feature.

4. Full Functionality

The goal is a positive-sum outcome that delivers both privacy and functionality. Privacy measures should not compromise essential security, analytics, or business requirements. Designs that achieve both are preferred over false trade-offs that degrade the quality of service.

5. End-to-End Security

Data is protected throughout its lifecycle: collection, transport, processing, storage, and deletion. Encryption, access controls, and secure deletion are built into the system from the outset, reducing the risk of exposure from cradle to grave.

6. Visibility and Transparency

Stakeholders, regulators, and users can see what data is collected, how it is stored, and how the algorithms operate. Transparent processes enable independent third-party audits, informed user consent, and efficient redress.

7. Respect for User Privacy

Users are treated as partners, entitled to access, correct, and delete their data. Privacy controls and policies are easy to understand and use, and honoring user rights is the default behavior.

Why Is Privacy Critical in Computer Vision?

Computer vision processes highly sensitive data, from faces captured for facial recognition to shopper movements tracked by retail analytics. Its applications span many sectors and stakeholders, and each brings its own set of privacy challenges.

The Sensitivity of Visual Data

Visual data is uniquely sensitive because it often embeds biometric identifiers such as faces, behavioral patterns, and context about where a person was and with whom. A single image or video can reveal far more than a text transcript of the same recording. Misuse or unauthorized disclosure can cause serious personal harm and legal liability.

Growing Use Cases

Computer vision is moving into smart homes, autonomous vehicles, hospitals, and many other settings, and each new use case increases the potential for privacy exposure. Data flows may involve third parties, cloud processing, and IoT devices, which compounds risk at several levels and makes regulatory compliance more complex.

Regulatory Pressure

Countries around the world are enacting new privacy laws. These laws typically require consent or another lawful basis for processing personal data, data minimization, and durable, understandable audit trails of how data is used and processed. Companies that fail to build privacy into their products face penalties (under the GDPR, fines of up to €20 million or 4% of total worldwide annual turnover, whichever is higher) as well as reputational damage.

Trust and Adoption

End-users, clients, and partners are more likely to adopt and recommend solutions that demonstrate an observable commitment to privacy. In sum, trust drives adoption, fuels brand development, and sustains the ecosystem.

Applying Privacy-by-Design in Computer Vision

Implementing privacy-by-design means building specific best practices into development, training, and deployment:

1. Data Minimization

Only gather the data necessary for the operation of your system. Limit how long you store it, and do not keep raw or high-resolution video if it is not needed.
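As a minimal sketch of what minimization and retention limits can look like in code, the example below assumes a hypothetical people-counting feature whose events are Python dicts. The allow-list `ALLOWED_FIELDS` and the helpers `minimize` and `purge_expired` are illustrative names, not part of any specific product.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical allow-list: the only fields a people-counting feature needs.
ALLOWED_FIELDS = {"camera_id", "zone", "timestamp", "person_count"}


def minimize(event: dict) -> dict:
    """Keep only allow-listed fields; frames, crops, and embeddings are dropped."""
    return {key: value for key, value in event.items() if key in ALLOWED_FIELDS}


def purge_expired(records: list, now: datetime, retention: timedelta) -> list:
    """Return only the records that are still inside the retention window."""
    cutoff = now - retention
    return [record for record in records if record["timestamp"] >= cutoff]


# Example: a raw detector event carrying more data than the feature needs.
raw_event = {
    "camera_id": "cam-7",
    "zone": "entrance",
    "timestamp": datetime(2024, 1, 30, 9, 0, tzinfo=timezone.utc),
    "person_count": 3,
    "frame": bytes(16),               # stand-in for raw image bytes
    "face_embeddings": [[0.1, 0.2]],  # biometric data the feature does not need
}
slim = minimize(raw_event)

old_event = dict(slim, timestamp=datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc))
kept = purge_expired(
    [old_event, slim],
    now=datetime(2024, 1, 31, tzinfo=timezone.utc),
    retention=timedelta(days=7),
)
```

An allow-list is used rather than a block-list so that any new field a detector starts emitting is dropped by default until someone decides it is needed.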

2. Anonymization and Pseudonymization

Replace biometric data and other direct identifiers with pseudonymous tokens, such as keyed hashes, or remove them entirely to produce anonymized data. Blur or redact faces and other identifying regions at the point of capture, on edge devices, before anything is uploaded to the cloud.
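The sketch below illustrates both ideas using only the Python standard library, under stated assumptions: pseudonymization via a keyed hash (HMAC-SHA256) and redaction by overwriting a bounding box in a grayscale image stored as a list of rows. The helper names, the key value, and the box coordinates are hypothetical; in practice the box would come from an on-device face or license-plate detector, which is not shown.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same identifier always yields the same token, so records stay linkable.
    Without the key, nobody can recompute tokens for guessed identifiers, which a
    plain unkeyed hash would allow. Whoever holds the key can still re-link
    tokens, so this is pseudonymization, not anonymization.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_box(image: list, box: tuple, fill: int = 0) -> list:
    """Return a copy of a grayscale image (a list of pixel rows) with the
    region box = (x0, y0, x1, y1) overwritten by a solid fill.
    x1 and y1 are exclusive; the box is clipped to the image bounds."""
    x0, y0, x1, y1 = box
    out = [row[:] for row in image]
    for y in range(max(0, y0), min(len(out), y1)):
        for x in range(max(0, x0), min(len(out[y]), x1)):
            out[y][x] = fill
    return out


# Example usage with a hypothetical key and a 4x4 all-white image.
KEY = b"replace-with-a-key-from-a-secrets-manager"
token = pseudonymize("plate:ABC-123", KEY)
image = [[255] * 4 for _ in range(4)]
redacted = redact_box(image, (1, 1, 3, 3))  # box would come from an on-device detector
```

A solid fill is used here because it is simple and irreversible; a light blur can sometimes be partially undone, so redaction strength should match the risk.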

3. User Consent and Control

Tell users what personal information you collect and how it will be used, and provide simple ways to opt in or withdraw consent. Offer options to access, correct, and delete their data, along with easy-to-understand privacy dashboards.
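A consent check can be as simple as a lookup that fails closed. The following is a minimal in-memory sketch; `ConsentRegistry` and the purpose strings are hypothetical, and a real system would persist grants, timestamp them, and record which notice the user saw.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical in-memory record of which purposes each user has opted in to.

    A missing entry means "no consent", so processing is off by default.
    """
    grants: dict = field(default_factory=dict)  # user_id -> set of purposes

    def grant(self, user_id: str, purpose: str) -> None:
        self.grants.setdefault(user_id, set()).add(purpose)

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Withdrawing should be as easy as granting; unknown users are a no-op.
        self.grants.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self.grants.get(user_id, set())

    def export(self, user_id: str) -> list:
        """Support access requests: list the purposes a user has consented to."""
        return sorted(self.grants.get(user_id, set()))


registry = ConsentRegistry()
registry.grant("user-42", "footfall_analytics")
registry.grant("user-42", "queue_alerts")
registry.withdraw("user-42", "queue_alerts")
```

Processing code would call `registry.allows(user_id, purpose)` before touching a user's data, which keeps consent enforcement in one place instead of scattered across features.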

4. Security Throughout the Lifecycle

Use strong encryption for data both in transit and at rest. Maintain tamper-evident audit logs and conduct periodic penetration testing to verify the security of your system.
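One common way to make an audit log tamper-evident is hash chaining: each entry stores a hash over the previous entry's hash plus its own content. The sketch below assumes log events are JSON-serializable dicts; the class name `HashChainedLog` is hypothetical. Note that someone able to rewrite the entire log could recompute every hash, so real deployments typically also anchor the latest hash somewhere the log writer cannot modify.

```python
import hashlib
import json


class HashChainedLog:
    """Append-only log where each entry commits to the hash of the previous one.

    Editing, deleting, or reordering an earlier entry breaks every later hash,
    so tampering is detectable (tamper-evident), although it is not prevented.
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list = []

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        payload = json.dumps(event, sort_keys=True)  # canonical form of the event
        return hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"event": dict(event), "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = HashChainedLog()
log.append({"actor": "analyst-3", "action": "view_clip", "clip": "c-101"})
log.append({"actor": "admin-1", "action": "delete_clip", "clip": "c-101"})
```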

5. Transparency and Explainability

Provide open documentation, transparent APIs, and explainable decisions to regulators and users. Record model updates, inference results, and data access so that audits can be trusted.
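Logging inference results does not require logging images. As a sketch under assumed field names, each decision can be recorded with the model version and a digest of its input; the model name `ppe-detector-2.3.1` and the label are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def inference_record(frame: bytes, model_version: str, label: str,
                     confidence: float, timestamp: datetime) -> dict:
    """Build one audit entry for a single model decision.

    The entry stores a SHA-256 digest of the input rather than the image, so
    the log does not itself hold imagery, yet an auditor holding the retained
    frame can confirm it is the input that produced this decision.
    """
    return {
        "timestamp": timestamp.isoformat(),
        "model_version": model_version,  # ties each decision to a documented model release
        "input_sha256": hashlib.sha256(frame).hexdigest(),
        "label": label,
        "confidence": round(confidence, 4),
    }


# Hypothetical model name and decision, serialized as one JSON line.
entry = inference_record(bytes(16), "ppe-detector-2.3.1", "helmet_missing", 0.91234,
                         datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc))
line = json.dumps(entry, sort_keys=True)
```

Recording the model version alongside each decision is what lets a reviewer connect an individual outcome back to the documentation for the model that produced it.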

6. Accountability and Auditability

Assign specific team members responsibility for privacy practices. Conduct regular privacy reviews and privacy impact assessments, and keep audit logs to support compliance and continuous improvement.

7. Contextual Integrity

Adapt privacy safeguards to the context: the appropriate measures for a medical deployment may differ significantly from those for retail or a smart city. Do not repurpose data for secondary uses without the user's knowledge and explicit consent.
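Context-specific safeguards are often expressed as per-deployment policy configuration plus a purpose check. In the sketch below, the context names, purposes, and retention numbers are illustrative placeholders, not recommendations; real values would come from legal and ethical review of each deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextPolicy:
    allowed_purposes: frozenset
    retention_days: int
    store_raw_video: bool


# Illustrative placeholder values only.
POLICIES = {
    "medical": ContextPolicy(frozenset({"diagnosis", "clinical_audit"}),
                             retention_days=365, store_raw_video=True),
    "retail": ContextPolicy(frozenset({"footfall_counting", "queue_monitoring"}),
                            retention_days=30, store_raw_video=False),
    "smart_city": ContextPolicy(frozenset({"traffic_flow"}),
                                retention_days=7, store_raw_video=False),
}


def is_use_permitted(context: str, purpose: str, has_explicit_consent: bool = False) -> bool:
    """Allow a use only if it matches a purpose declared for the context the
    data was collected in; any secondary use needs explicit consent.
    Unknown contexts fail closed."""
    policy = POLICIES.get(context)
    if policy is None:
        return False
    return purpose in policy.allowed_purposes or has_explicit_consent
```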

Techniques That Enable Privacy-by-Design in Computer Vision

The following privacy-enhancing engineering techniques help put the principles above into practice:

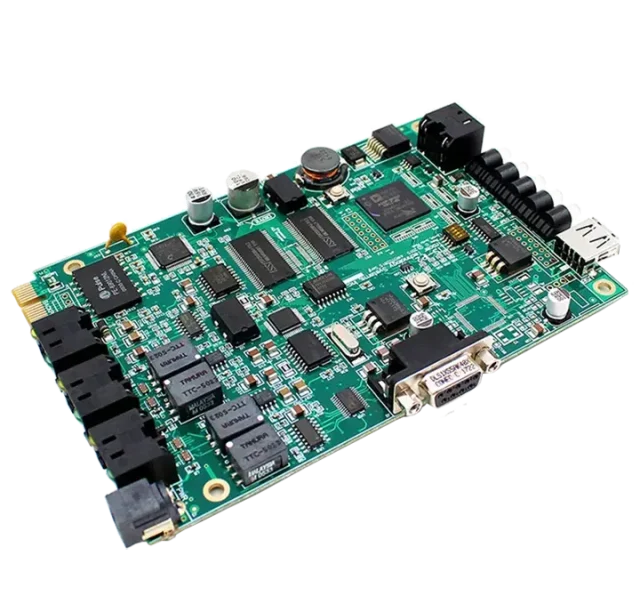

Edge Computing

Processing data on edge devices, such as inside cameras or on gateways, reduces exposure: only metadata or anonymized results are sent onward, and sensitive footage is never stored in the cloud.
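The sketch below shows the shape of an edge-side step, assuming a hypothetical on-device detector (not shown) has already produced labeled detections for a frame. Only per-label counts are serialized for upload; the `Detection` type, the threshold, and the message format are illustrative assumptions.

```python
import json
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float


def summarize_on_device(camera_id: str, detections: list, min_confidence: float = 0.5) -> str:
    """Runs on the camera or gateway after a local detector has processed a frame.

    Only per-label counts leave the device; the frame and the individual
    detections (positions, crops) are not part of the outgoing message.
    """
    counts = Counter(d.label for d in detections if d.confidence >= min_confidence)
    return json.dumps({"camera_id": camera_id, "counts": dict(counts)}, sort_keys=True)


# Hypothetical output of an on-device detector for one frame.
frame_detections = [
    Detection("person", 0.91),
    Detection("person", 0.84),
    Detection("car", 0.77),
    Detection("person", 0.32),  # below threshold, ignored
]
message = summarize_on_device("cam-7", frame_detections)
```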

Federated Learning

Each device or site trains a local copy of the model on its own data, and a central server aggregates only the resulting model parameters, not visual data. Raw images therefore never leave the user's device or premises.
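The server-side aggregation step can be sketched as a weighted average in the style of Federated Averaging (FedAvg), where each client's parameters are weighted by how many local examples it trained on. This covers only the aggregation step, with parameters as plain lists of floats; local training, secure transport, and multiple rounds are assumed and not shown.

```python
def federated_average(client_updates: list) -> list:
    """FedAvg-style aggregation on the server.

    client_updates is a list of (parameters, num_local_examples) pairs. The
    result is the mean of the parameter vectors weighted by example count.
    The server receives only these numbers, never the clients' images.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must report training examples")
    dim = len(client_updates[0][0])
    average = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, p in enumerate(params):
            average[i] += p * n / total
    return average


# Two hypothetical sites that trained locally on 100 and 300 images.
global_params = federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)])
```

Model updates can still leak some information about training data, which is one reason federated learning is often combined with techniques such as differential privacy.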

Differential Privacy

Commonly used in machine learning development, differential privacy adds calibrated statistical “noise” to query results or training so that any output reveals very little about a single individual, making re-identification from aggregated analytics much harder. Many healthcare and public datasets use differential privacy.
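A classic example is the Laplace mechanism applied to a counting query, such as "how many people entered this zone today". Adding or removing one person changes the count by at most 1, so adding Laplace noise with scale 1/ε gives ε-differential privacy for that query. This is a minimal sketch: naive floating-point noise sampling like this has known weaknesses, so production systems should rely on a vetted differential privacy library.

```python
import random


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1).

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


rng = random.Random(7)
noisy = private_count(128, epsilon=0.5, rng=rng)
```

Each released answer consumes privacy budget, so repeated queries over the same data must account for the total ε spent.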

Homomorphic Encryption

Homomorphic encryption allows computations to be performed directly on encrypted data, so highly sensitive information stays protected during analysis, even when a third party does the processing.
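To make the idea concrete, the sketch below implements a toy version of the Paillier cryptosystem, which is additively (partially) homomorphic: multiplying two ciphertexts yields an encryption of the sum of the plaintexts. A scenario where encrypted per-camera counts are summed without being decrypted is assumed for illustration. The tiny primes make this completely insecure; real deployments use vetted libraries and moduli of 2048 bits or more.

```python
import math
import random

# Toy key built from tiny primes: for illustration only, completely insecure.
P, Q = 1009, 1013
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # valid because the generator is g = n + 1


def encrypt(m: int, rng: random.Random) -> int:
    if not 0 <= m < N:
        raise ValueError("message out of range")
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    # With g = n + 1, g^m mod n^2 simplifies to 1 + m*n.
    return (1 + m * N) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    x = pow(c, LAM, N2)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return c1 * c2 % N2


rng = random.Random(1)
c_a = encrypt(12, rng)  # e.g. an encrypted count from camera A
c_b = encrypt(30, rng)  # e.g. an encrypted count from camera B
c_total = add_encrypted(c_a, c_b)  # computed without decrypting either count
```

Only the key holder can run `decrypt(c_total)`, while the party computing `c_total` never sees the individual values. Fully homomorphic schemes support richer computation at much higher cost.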

Synthetic Data

Synthetic data is a realistic but artificial dataset used to train models. Because it does not depict real individuals, it protects personal identities, and it can also improve fairness by supplying additional examples of groups that are under-represented in real data.
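As a toy illustration of procedural synthetic data, the sketch below renders grayscale images containing a rectangular "object" on a noisy background and returns exact bounding-box labels, generating an equal number of samples for each appearance variant. The variants and intensity ranges are hypothetical stand-ins; real pipelines typically use 3D renderers or generative models and vary attributes such as lighting, pose, or demographics.

```python
import random

# Hypothetical appearance variants: (min, max) object pixel intensity.
VARIANTS = {"high_contrast": (200, 255), "low_contrast": (70, 110)}


def render_sample(width: int, height: int, intensity: tuple, rng: random.Random):
    """Draw one grayscale image: a rectangle 'object' on a noisy background.

    Returns (image, box) where box = (x0, y0, x1, y1) with x1, y1 exclusive.
    The label is exact by construction; no real person or scene is involved.
    """
    image = [[rng.randint(0, 60) for _ in range(width)] for _ in range(height)]
    w = rng.randint(2, width // 2)
    h = rng.randint(2, height // 2)
    x0 = rng.randint(0, width - w)
    y0 = rng.randint(0, height - h)
    lo, hi = intensity
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = rng.randint(lo, hi)
    return image, (x0, y0, x0 + w, y0 + h)


def balanced_dataset(n_per_variant: int, width: int = 16, height: int = 12, seed: int = 0) -> list:
    """Generate the same number of samples for every variant, so no variant
    is under-represented in the training set."""
    rng = random.Random(seed)
    samples = []
    for name, intensity in VARIANTS.items():
        for _ in range(n_per_variant):
            image, box = render_sample(width, height, intensity, rng)
            samples.append({"variant": name, "image": image, "box": box})
    return samples


dataset = balanced_dataset(n_per_variant=5)
```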

Final Thoughts

Privacy-by-design principles in computer vision are not optional; they are a competitive differentiator, a regulatory requirement, and a prerequisite for public trust. The applications of the future, whether built by a Computer Vision Development service provider, through integrated AI Development Services, or with advanced Machine Learning Development, will be those that respect users' rights, protect their safety, and comply with regulations at every stage of the process. The best systems anticipate risk, minimize data exposure, and strengthen user agency, realizing the benefits of visual AI while protecting the people it serves.